Educators across Australia are always trying new ways to improve outcomes for their students, but not all changes result in improvements. Is there a way to increase the likelihood that a change will lead to improvement?

There are two ways to increase the likelihood that a change will lead to an improvement: plan the change within the scaffold of an improvement cycle, and ensure that decisions are based on the best available evidence about what is likely to work.

Making evidence-based decisions in an improvement cycle draws on both external evidence and practice-based evidence (Vaughan, Deeble & Bush, 2017). To improve learning, educators need access to high-quality evidence from empirical research so they can make informed decisions. Those decisions are then supported by reflection on the evidence gathered as the change is put into practice. As Sharples (2013) argued, evidence is not a replacement for professional judgement and experience; it is used in conjunction with them.

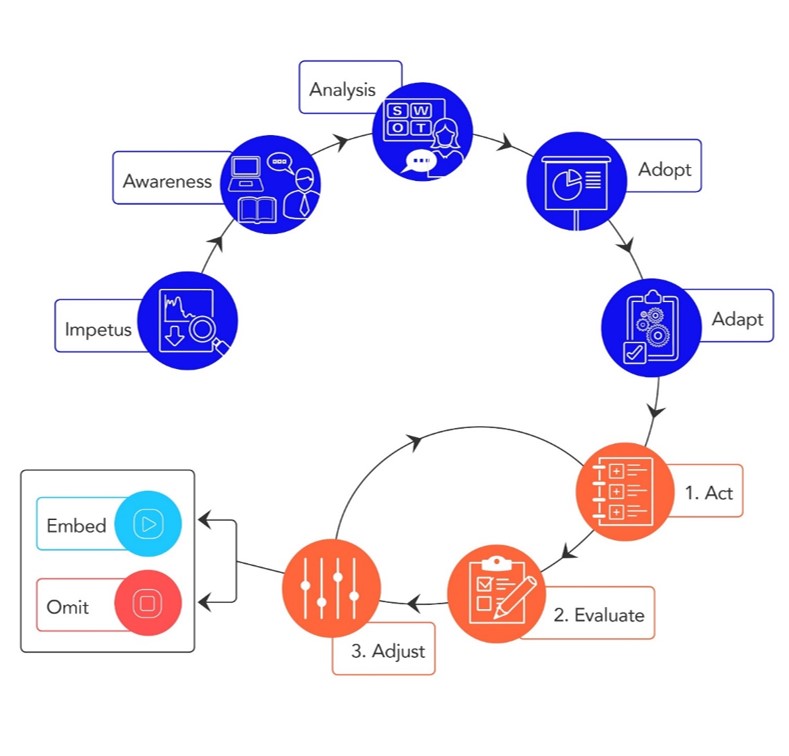

Evidence is the starting point for making decisions. An improvement cycle helps educators structure a change around the growth they want to see. Such cycles give educators a framework to plan, identify, track and monitor the evidence about and for learning, making improvement and impact more intentional. At Evidence for Learning (E4L), we offer the Impact Evaluation Cycle (Figure 1) and the Education Action Plan (EAP), which is aligned to the Impact Evaluation Cycle, as ways to structure a school or system improvement process. This article explores how these models are put into practice at the system and school levels.

The Impact Evaluation Cycle and Education Action Plan

The Impact Evaluation Cycle is a 10-stage process. It runs from outlining the improvement challenge specific to the school context, through designing and implementing an approach, to developing an evaluation plan to measure and evaluate that approach's effectiveness. It is not a static process. Educators can decide which stage of the Impact Evaluation Cycle to work in, depending on their context and current situation. For example, if an intervention has already been implemented, educators can choose to focus on the Act, Evaluate and Adjust stages of the cycle. Examples of how these stages can be put into action are provided below.

Figure 1: An impact evaluation cycle. (Source: Evidence for Learning, 2018).

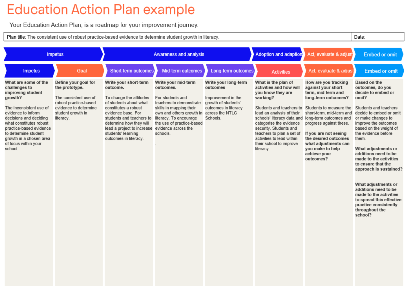

The EAP is a road map for an improvement journey and is free to access from the Evidence for Learning website. It supports decision-making as teachers or leaders plan and implement each step of the cycle. Within the EAP, the educator sets out answers to three questions:

- Where are you going?

- How will you get there?

- What will tell you that you have arrived?

One of the most valuable elements of the EAP is the intentional work of devising short-, mid- and long-term outcomes and the activities that will achieve them. The EAP (Figure 2) provides further scaffolding for the change process at the classroom, school or system level, with a series of questions mapped to the Impact Evaluation Cycle.

Figure 2: An example of an Education Action Plan. (Source: Evidence for Learning, 2018).

Examples of use at a school and system level

Educators across Australia have embedded the Impact Evaluation Cycle into their work. One example is Cath Apanah, Assistant Principal of Montrose Bay High School in Tasmania, who with the leadership team is using the Impact Evaluation Cycle to structure the implementation of a coaching model across the school. In another example, working with initial teacher education and Master's students at Monash University in Clayton, Victoria, we adapted the EAP for beginning teachers.

More than 3,500 kilometres from Melbourne, John Cleary, Principal of Casuarina Street Primary School in the Northern Territory, is using the Impact Evaluation Cycle at a system level. He is working across 16 schools in Darwin and Katherine, leading the Northern Territory Learning Commission (NTLC) and engaging students, teachers and leaders to embed evidence as the key driver for decision-making. The NTLC has grown from several schools in 2017. Its students and teachers collect evidence to inform the design of a project scaffolded by the Impact Evaluation Cycle and the EAP. Through this project, the students aim to improve their outcomes in literacy. We describe the work to date on this project in more detail below.

Working with students

In thinking about leading change in schools, there are many places a leader or teacher can start. All successful change involves stakeholder engagement: that is, engaging with staff, students and the wider school community, including through partnerships with external organisations (Caldwell & Harris, 2008; Dinham, 2008). External partnerships can help to improve outcomes for students (Caldwell & Vaughan, 2012; Vaughan, 2013; Vaughan, Harris & Caldwell, 2011). The evaluation of the Creative Arts for Indigenous Parental Engagement (CAIPE) program, which drew on the cultural capital of the students and parents, found significant increases in attendance and literacy outcomes (English grades and NAPLAN results) (Vaughan & Caldwell, 2017). Evidence for Learning is now partnering with Cleary to work directly with students to drive change within and across schools in the Northern Territory.

Evidence for Learning has been working in partnership with a group of schools in the NTLC since June 2017. We are partway through the project, so we cannot yet report on changes in outcomes. What we can describe is how the Impact Evaluation Cycle and EAP are being used to structure change in these schools.

Below are the stages of the Impact Evaluation Cycle and the questions we used to scaffold the change, each with a worked example from the NTLC.

Impetus. What are some of the challenges to improving student growth? In the NTLC, the challenges identified were the inconsistent use of evidence to inform decisions, and deciding what constitutes robust practice-based evidence to determine student growth in a chosen area of focus within a school. What are the key data statements that lead you to believe this is an area of need? How valid and reliable is the data you have collected? Are there consistencies across a range of available data sets? Finally, how can students' experiences provide the narrative behind the data, as a crucial step to understanding it more fully? Schools often move through the Impetus stage too quickly as they seek to respond to data they have yet to fully consider. Spending more time in this stage is intended to ensure that all available information has been collated for analysis.

Define your goal: The consistent use of robust practice-based evidence to determine student growth in literacy.

Awareness and Analysis. Short-term outcomes: To change students' attitudes about what constitutes a robust evidence base. For students and teachers to determine how they will lead a project to increase students' learning outcomes in literacy. This is scaffolded by workshops in which Cleary and Evidence for Learning work with the students and teachers. In these workshops, the students categorised their teachers' evidence of learning in reading according to whether it was qualitative or quantitative, and by the strength of the evidence. We adopted the lock rating system used in our Teaching & Learning Toolkit so that students could rate the security of the evidence (Education Endowment Foundation, 2018).

Mid-term outcomes: For students and teachers to demonstrate skills in mapping their own and others' growth in literacy. To encourage the use of practice-based evidence across the schools.

Long-term outcomes: Improved growth in students' literacy outcomes across the NTLC schools.

Adoption and Adaption. What is the plan of activities and how will you know that they are working? Students and teachers to lead an analysis of their schools' literacy data and rate the security of that evidence. Students and teachers to plan a set of activities to lead within their school to improve literacy.

Act, Adapt and Adjust. Students to measure progress against the short-, mid- and long-term outcomes.

Embed or Omit. Students and teachers decide to embed, omit or make changes to improve the outcomes based on the weight of the evidence before them.

Focusing on what's important

The EAP is a model that systems, schools and teachers can use to structure a change. By thinking through the why, the how and the what of the process before beginning, and then using the EAP to track their progress, educators can stay focused on what is important for their school. They can use evidence to inform their plans and practice-based evidence to determine whether the change has had the desired impact. This is a continuous cycle of change, driven by evidence-based decision-making and by the gathering of practice-based evidence, through which educators make thoughtful changes that improve the lives of their students.

The words of Professor Brian Caldwell make a fitting conclusion for this article: ‘There are as many challenges for governments and school systems as there are for schools. We can't keep doing the same things that have not worked well, even though we try harder and allocate more funds to support our efforts. An innovative nation calls for an innovative profession in innovative schools.’ (Caldwell, 2016, p. xvi).

References

Caldwell, B. (2016). The autonomy premium: Professional autonomy and student achievement in the 21st century. Australian Council for Educational Research, Camberwell.

Caldwell, B.J., & Harris, J. (2008). Why not the best schools? What we have learned from outstanding schools around the world. ACER Press, Melbourne.

Caldwell, B.J., & Vaughan, T. (2012). Transforming Education through The Arts. Routledge, London and New York.

Dinham, S. (2008). How to Get Your School Moving and Improving. Australian Council for Educational Research, Camberwell.

Education Endowment Foundation. (2018). Evidence for Learning Teaching & Learning Toolkit: Education Endowment Foundation, viewed 2 May 2017, http://evidenceforlearning.org.au/the-toolkit/.

Evidence for Learning. (2018). Impact Evaluation Cycle, viewed 16 May 2017, http://evidenceforlearning.org.au/evidence-informed-educators/impact-evaluation-cycle/.

Sharples, J. (2013). Evidence for the frontline: A report for the alliance for useful evidence. Alliance for Useful Evidence.

Vaughan, T. (2013). Evaluation of Bell Shakespeare's Learning Programs. Bell Shakespeare, Sydney.

Vaughan, T., & Caldwell, B.J. (2017). Impact of the creative arts indigenous parental engagement (CAIPE) program. Australian Art Education, 38(1), 76.

Vaughan, T., Deeble, M., & Bush, J. (2017). Evidence-informed decision making. Australian Educational Leader, 39(4), 32.

Vaughan, T., Harris, J., & Caldwell, B. (2011). Bridging the gap in school achievement through the arts: summary report. The Song Room, Abbotsford, http://www.songroom.org.au/wp-content/uploads/2013/06/Bridging-the-Gap-in-School-Achievement-through-the-Arts.pdf.

Think about an approach or program you’ve implemented in your own school: What did you want to achieve? Did you define your goals? How did you measure and evaluate its effectiveness?